In 1950, Alan Turing, a pioneer of modern computing, wrote about the challenge of developing “machines that can think”:

"We can only see a short distance ahead, but we can see plenty there that needs to be done."

Seventy-three years later, we’re watching machines turn into thinkers, creators, and decision-makers. We see plenty that needs to be done, and we wonder:

What would our future look like if we let progress override ethics?

Can an organization tap into AI's immense potential without slipping into a moral abyss?

According to major industry players who have invested billions in AI research and development, the answer is clear: the path forward demands greater effort to steer AI toward responsible use.

This blog post explores how machine learning (ML) models, AI regulation, and ethical AI can shape a future where AI technology aligns with human life and values.

Keep reading to:

- Understand the importance of responsible AI governance in creating a fair, safe, and trustworthy AI-driven world.

- Discover the best practices and principles of responsible AI that governance calls for.

What is responsible AI?

Responsible AI is a paradigm shift in how we approach AI development and machine learning models. The goal is to create AI systems that operate safely and fairly, with ethical considerations at their core.

To make this shift towards responsible AI, humanity as a whole needs to:

- Be aware of the benefits and risks of using artificial intelligence

- Improve AI governance using universal practices and principles

The 7 principles and practices of responsible AI

Responsible AI doesn't start with governments and laws. It starts with the companies and individuals who are hands-on in developing new technology. The following principles are gaining traction as the AI industry evolves, and tech giants like Microsoft already use them. Any new or existing AI development process should implement these principles in some capacity.

1. Transparency

Without transparency, there's no responsible AI. When we know how AI systems operate, we can understand what they can and can’t do. This knowledge helps us make more informed decisions about how to use them.

2. Fairness

AI should make the world better and more efficient while treating everyone equally. When we know an AI system is fair and unbiased, we can trust that it doesn’t discriminate against any particular group of people.

3. Accountability

Accountability is also critical to responsible AI. It applies to both the development and the use of every machine learning model and AI system, and it's how we can:

- Hold accountable anyone who tries to use an AI system to cause harm.

- Prevent them from trying in the first place.

4. Privacy

Respecting people’s privacy and protecting personal data matter because training AI requires large amounts of data that belong to people. The better AI protects privacy, the more trustworthy it becomes, and the more willing people will be to use it.

5. Security

Security is crucial for protecting sensitive data and guarding against threats that could corrupt a system or introduce harmful bias. A secure AI system is more trustworthy and less likely to cause unintended consequences for people.

6. Robustness

No matter how much we train AI, unexpected situations will occur. That’s why we need AI systems that work reliably under varied conditions, without breaking down or producing harmful or unfair results.

7. Beneficial use

The goal is to make AI a responsible tool for everyone. To get there, we must create applications that help people and society. Following this principle helps minimize risk and create positive outcomes, such as improved fairness and efficiency.

Why is responsible AI important?

Responsible AI principles and best practices help ensure that AI systems and models, including generative AI, transform our lives while:

- Keeping humans in charge.

- Ensuring AI does more good than harm.

AI can be a great tool, but, as Winston Churchill said, “the price of greatness is responsibility.”

After all, artificial intelligence can profoundly affect our lives. Whatever techniques it relies on, from ML models and decision trees to natural language processing, responsible AI must serve the common good. AI must align with our society’s ethics, laws, and values.

High-profile figures advocate for responsible AI

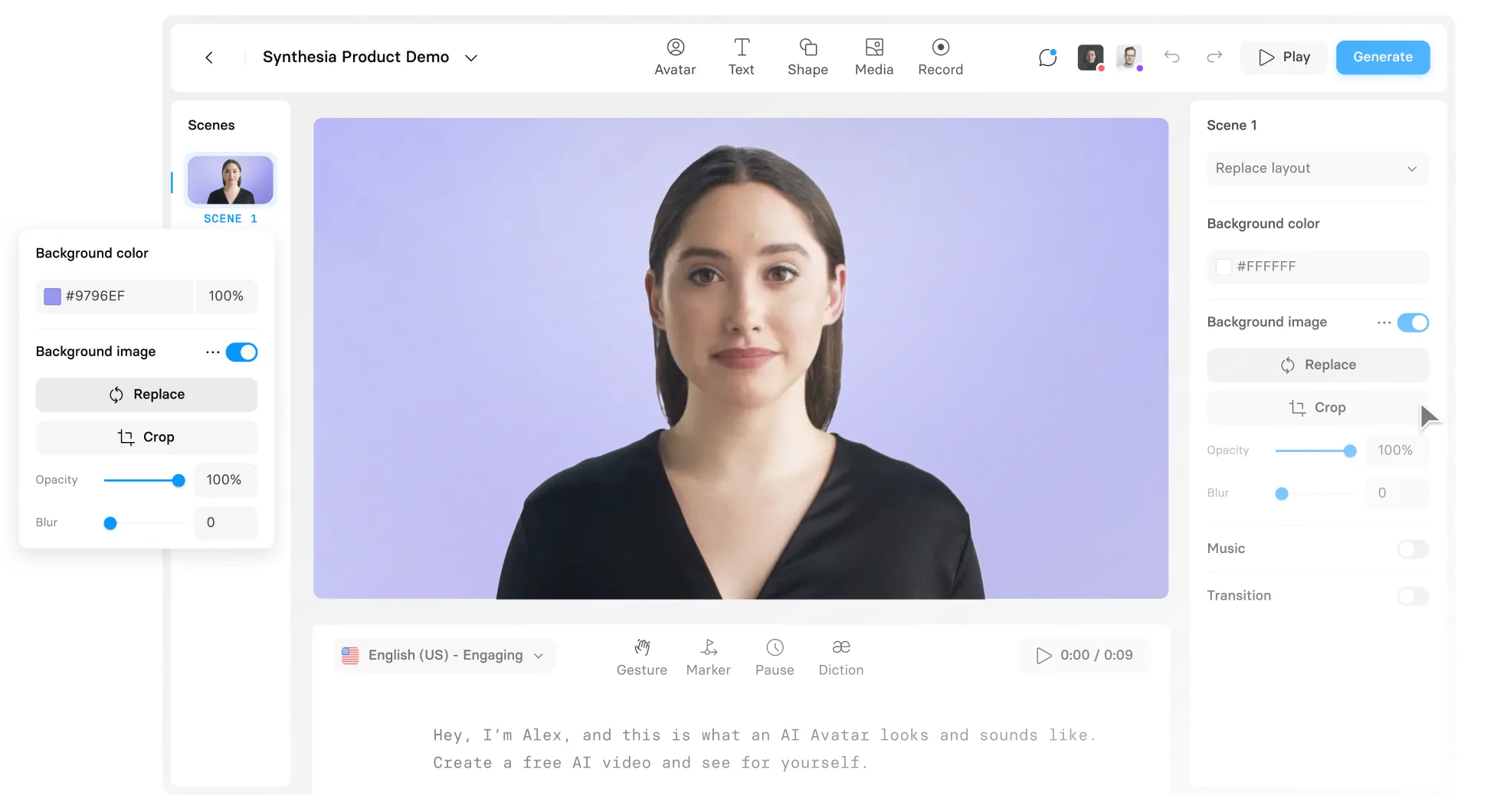

Responsible AI and machine learning are still an emerging area of AI governance. Even so, high-profile figures have become vocal about it. UK Foreign Secretary James Cleverly recently partnered with the synthetic media company Synthesia to promote AI safety.

The politician trusted the AI video company to generate his likeness (avatar and voice) and to use his digital twin in a video with a clear, emphatic message about responsible AI:

“Keep harmful content from being generated. We want to put the right guardrails in place so that we can all benefit from the huge opportunities of AI but remain safe.”

Responsible AI prevents bias and promotes fairness

Bias is a tendency to lean in a certain direction, often based on stereotypes, not facts, leading to unfair treatment or judgment.

Bias is a serious problem for any AI system because such systems can significantly affect many people's lives, amplifying and spreading biased views on a large scale.

When machine learning models are biased, their decisions can harm people. For example, a biased ML model might make wrong medical diagnoses, unfairly reject loan applications, or overlook qualified hiring candidates because of their background or appearance.

As AI software built on machine learning and generative AI models evolves, promoting responsible AI becomes a must. We're tasked with setting responsible AI standards that cover ethics and democratization and that prevent biased decisions.

How do we keep an AI system trustworthy and unbiased?

There are three key ways to keep your AI system trustworthy. In a nutshell, it comes down to feeding any artificial intelligence model good, diverse data, ensuring algorithms accommodate that diversity, and testing the resulting software for mislabeling or poor correlations. Here's a bit more detail on how we can make that happen:

- Establish clear decision-making processes by training ML models, including pre-trained NLP models, only on comprehensive, high-quality data sets.

- Train developers of ML models to design algorithms that reflect a broad range of perspectives and experiences, select diverse data, and interpret data impartially.

- Evaluate ML models' decision-making processes with adversarial testing, including checks for unwanted gendered correlations.
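To make adversarial testing for gendered correlations a little more concrete, here is a minimal sketch of one common form of it, a counterfactual swap test: swap gendered words in each input and flag any case where the model's output changes noticeably. The `score_resume` model, the tiny word list, and the tolerance value are all hypothetical stand-ins for illustration, not part of any real product or library.

```python
import re
from typing import Callable, Dict, List

# Hypothetical, deliberately tiny swap list. Ambiguous words like "her"
# (which could map to "him" or "his") are left out for simplicity.
GENDER_SWAPS: Dict[str, str] = {
    "he": "she", "she": "he",
    "him": "her", "his": "her",
    "man": "woman", "woman": "man",
    "male": "female", "female": "male",
}


def swap_gendered_terms(text: str) -> str:
    """Return a counterfactual copy of `text` with gendered words swapped."""
    def replace(match: re.Match) -> str:
        word = match.group(0)
        swapped = GENDER_SWAPS.get(word.lower())
        if swapped is None:
            return word  # not a gendered term; leave it untouched
        # Preserve a leading capital letter, e.g. "He" -> "She".
        return swapped.capitalize() if word[0].isupper() else swapped

    return re.sub(r"\b\w+\b", replace, text)


def counterfactual_failures(
    model: Callable[[str], float], inputs: List[str], tolerance: float = 0.01
) -> List[str]:
    """Return the inputs whose score shifts by more than `tolerance` after the swap."""
    failures = []
    for text in inputs:
        if abs(model(text) - model(swap_gendered_terms(text))) > tolerance:
            failures.append(text)
    return failures


# Hypothetical toy model with a built-in gendered correlation, for demonstration only.
def score_resume(text: str) -> float:
    return 0.8 if re.search(r"\bhe\b", text, re.IGNORECASE) else 0.6


samples = [
    "He led a team of five engineers.",
    "The candidate led a team of five engineers.",
]
print(counterfactual_failures(score_resume, samples))
# → ['He led a team of five engineers.']
```

A real test suite would use much larger curated word lists and many more inputs, but the principle is the same: inputs that differ only in gendered terms should receive the same decision.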

Responsible AI eases technology development & adoption

AI and ML models have enormous potential to improve life on our planet. To make the most of this invention, we need a forward-looking approach that puts humans (not results) first.

In other words, responsible AI needs to be popular AI.

Tools should be accessible and beneficial to a broad range of people, and they should help people avoid the real-world risks of biased or harmful outcomes. To get there, development processes need to comply with both existing and emerging data privacy regulations, and legislation should give developers and organizations an external incentive to stay compliant and take responsibility for their inventions.

The need for responsible AI standardization

Nobel Prize-winning author Thomas Mann believed that “order and simplification are the first steps toward the mastery of a subject.”

A standardized development process is indeed the first step toward enabling an organization to create responsible AI and machine learning.

We have safety standards for cars and food. Similarly, we need AI regulation that requires developers to make their technologies human-centered.

With the right standards, we can:

- Protect our privacy by ensuring every organization handles our data responsibly.

- Reduce bias and promote justice by ensuring AI systems treat everyone equally and avoid unfair outcomes.

- Improve user safety by ensuring technologies like self-driving cars, medical diagnosis tools, and financial systems are reliable and safe.

Google’s responsible AI practices and principles

Google has invested more money in AI research and development than any other company.

Over the past decade, the tech giant has spent up to $200 billion on artificial intelligence applications. Along the way, it has recognized that responsible AI principles help the organization:

- Build trust with users.

- Avoid discrimination and privacy issues.

Like many companies, Google hasn’t always gotten things right, but transparency is its main pillar for responsible AI. The company has published many resources on the topic, which is a positive thing, considering that the industry titan is here to stay.

Some specific examples of Google's practices that support responsible AI principles and AI ethics include:

- Google AI Principles: Seven principles that guide continuous improvement in the company's responsible AI work.

- Google AI for Social Good program: An initiative that funds and supports research and organizations that use AI to solve social and environmental problems.

- Google Model Cards: A way for teams to document their AI models and make them more transparent.

- Google AI Explainability Toolkit: A set of tools and resources that help teams make their AI models more explainable.

- Google AI Fairness Testing Tools: A set of tools for teams to identify and mitigate bias in every AI system.

More responsible AI examples

More and more companies are actively working on implementing the principles of responsible AI. Every big name in the industry has some sort of program to reduce the risk of misusing AI and address issues like transparency and accountability.

Facebook’s five pillars of responsible AI

Facebook uses AI in various ways, from managing News Feed to tackling misinformation, and it has a Responsible AI (RAI) team. This team works alongside external experts and regulators to develop machine learning systems responsibly.

Facebook's RAI focuses on five key areas:

- Privacy & Security

- Fairness & Inclusion

- Robustness & Safety

- Transparency & Control

- Accountability & Governance

OpenAI’s ChatGPT usage policies

OpenAI's ChatGPT usage policies promote AI ethics by:

- Supporting the responsible use of its models and services.

- Restricting usage in sensitive areas like law enforcement and health diagnostics.

Users must be informed about AI's involvement in products, especially in the medical, financial, and legal sectors.

Automated systems must disclose AI usage, and real-person simulations need explicit consent.

Salesforce’s five guidelines for responsible generative AI development

Salesforce outlines five key guidelines for the responsible development and implementation of generative AI:

- Accuracy: Demands verifiable results, advocating for using customer data in training models and clear communication about uncertainties.

- Safety: Prioritizes mitigating bias, toxicity, and harmful outputs, including thorough assessments and protecting personal data privacy.

- Honesty: Insists on respecting data provenance, consent, and transparency regarding AI-generated content.

- Empowerment: Aims to balance automation and human involvement to enhance human capabilities.

- Sustainability: Advocates for developing right-sized AI models to reduce their carbon footprint.

IBM’s fairness tool

AI Fairness 360 is an open-source toolkit developed by IBM Research to help detect, report, and mitigate bias in machine learning models across the AI application lifecycle.

Users can contribute new metrics and algorithms, share experiences, and learn from the community. This responsible AI toolkit offers:

- Over 70 metrics to measure individual and group fairness in AI systems.

- Algorithms that mitigate bias at different stages of an AI system's lifecycle.

- Tools and resources for understanding and addressing bias in AI systems.
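Two widely used group fairness measures, both among the metrics AI Fairness 360 reports, are statistical parity difference and disparate impact. The sketch below computes them by hand in plain Python instead of calling the toolkit's API, so it only illustrates what the numbers mean. The loan-approval outcomes, the group labels "A" and "B", and the choice of which group counts as privileged are made up for illustration.

```python
from typing import Sequence


def selection_rate(outcomes: Sequence[int], groups: Sequence[str], group: str) -> float:
    """Share of favorable outcomes (1 = approved) among members of `group`."""
    members = [y for y, g in zip(outcomes, groups) if g == group]
    if not members:
        raise ValueError(f"no samples for group {group!r}")
    return sum(members) / len(members)


def statistical_parity_difference(
    outcomes: Sequence[int], groups: Sequence[str], unprivileged: str, privileged: str
) -> float:
    """P(favorable | unprivileged) - P(favorable | privileged); 0 means parity."""
    return selection_rate(outcomes, groups, unprivileged) - selection_rate(
        outcomes, groups, privileged
    )


def disparate_impact(
    outcomes: Sequence[int], groups: Sequence[str], unprivileged: str, privileged: str
) -> float:
    """P(favorable | unprivileged) / P(favorable | privileged); 1 means parity."""
    privileged_rate = selection_rate(outcomes, groups, privileged)
    if privileged_rate == 0:
        raise ZeroDivisionError("privileged group has no favorable outcomes")
    return selection_rate(outcomes, groups, unprivileged) / privileged_rate


# Hypothetical loan decisions: group A is approved 3 of 4 times, group B 1 of 4.
approvals = [1, 1, 1, 0, 1, 0, 0, 0]
group_labels = ["A", "A", "A", "A", "B", "B", "B", "B"]

spd = statistical_parity_difference(approvals, group_labels, unprivileged="B", privileged="A")
di = disparate_impact(approvals, group_labels, unprivileged="B", privileged="A")
print(spd)          # → -0.5
print(round(di, 2))  # → 0.33
```

A common rule of thumb, the "four-fifths rule", treats a disparate impact below 0.8 as a warning sign, so this made-up model would warrant a closer look.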

Hugging Face Responsible AI

Hugging Face is a prominent organization in the AI and NLP field. The company has consistently shown commitment to responsible AI principles and practices. Key aspects of its approach include:

- Open source and transparency: It offers open-source tools and models that invite feedback and improvement from the community, leading to more ethical and less biased AI solutions.

- Community collaboration: By encouraging contributions from a diverse range of users and developers, they foster an inclusive environment.

- White box approach: Hugging Face promotes a white box approach by providing detailed documentation and interpretability tools for their models, in contrast with the black box nature of many other AI systems.

- Ethical guidelines and standards: The organization adheres to responsible AI guidelines and standards, ensuring its models and tools consider the potential impacts on society and individuals.

How Synthesia is pioneering the use of responsible AI

Today, Synthesia is the #1 AI video creation platform. But six years ago, it was just a humble research paper by Ph.D. graduates and professors at Stanford, UCL, and Cambridge University.

Its founders are academics, so they know how closely innovation and responsibility are tied, especially when it comes to developing generative AI systems. After all, Synthesia aims to simplify video creation by enabling text-to-video (TTV) processes, where lifelike synthetic humans are generated and controlled directly from a script.

In 2019, they made a virtual David Beckham speak nine languages in a video that raised awareness of malaria, one of the world's deadliest diseases. The production won that year’s CogX award for “outstanding achievement in social good use of AI.”

Synthesia can create photorealistic synthetic actors, or 'AI Avatars,' and make them speak in 120+ languages using advanced AI technologies.

That’s why the company is strongly motivated to fight misuse, such as creating deepfake content or spreading misinformation. It relies on a combination of technology and human experts to keep harmful content from being generated.

Victor Riparbelli, Synthesia’s CEO, says, “As with any other powerful technology, it will also be used by bad actors. AI will amplify the issues we already have with spam, disinformation, fraud, and harassment. Reducing this harm is crucial, and it’s important that we work together as an industry to combat the threats AI presents.”

Since 2017, Synthesia has been diligently working to transform video production through responsible AI, aligning its business model with ethical best practices.

Consent

Synthesia only creates an AI avatar with the person's explicit consent. The company is dedicated to avoiding impersonations and gray areas, and it works toward concrete goals:

- Transparent agreements with actors for stock avatars.

- Respect for any decision to opt out.

Control

Synthesia provides a secure platform environment and takes a strict approach to preventing the creation and distribution of harmful content. Beyond rigorous content moderation, it has a dedicated Trust and Safety team that ensures responsible use and data security are put into practice by every organization it works with. The company:

- Moderates every single video produced with its platform.

- Has a list of prohibited use cases in its Terms of Service that it strictly enforces.

- Offers an "on-rails" experience, meaning customers don't get access to the underlying data.

- Requests that clients use a custom avatar for political or religious content.

Collaboration

Synthesia believes in working with a diverse set of regulatory bodies and industry leaders to tackle AI challenges:

- Actively partners with media organizations, governments, and research institutions to develop best practices and educate the public on video synthesis technologies.

- Became a launch partner of the Partnership on AI's (PAI) Responsible Practices for Synthetic Media, the first industry-wide framework for the ethical and responsible development, creation, and sharing of synthetic media.

- Became an active member of the Content Authenticity Initiative.

Transparency and disclosure

Synthesia strongly believes transparency must be addressed across creation tools and distribution.

The company views public education and industry-wide collaboration as the most powerful levers for ensuring platform safety.

Future plans

The world and AI are evolving, as are industry guidelines and regulations. To keep up with it all, Synthesia is pursuing concrete goals such as:

- Verifying the credentials of any organization that wants to create news content using its technology.

- Collaborating with expert partners to curb the creation and spread of abusive content.

- Staying at the forefront of a rapid evolution in synthetic media and AI governance.

In his 2023 SaaStock keynote, CEO Victor Riparbelli explained that scaling the business is more about using generative AI to provide users with utility than about creating novelty.

The fascinating research behind making photorealistic synthetic actors the ethical way

Synthesia's mission is to make video creation from text easy, safe, and responsible for everyone.

In the near future, synthetic media will altogether remove the need for physical cameras, actors, and complex video editing. You’ll most likely want your organization to join the transformation when it happens.

Meanwhile, explore the fascinating research behind how Synthesia is pioneering privacy-enhanced synthetic video creation while closely following AI governance.